Here’s what Wikipedia tells us about big data:

Big data refers to data sets that are too large or complex to be dealt with by traditional data-processing application software. Data with many entries (rows) offer greater statistical power, while data with higher complexity (more attributes or columns) may lead to a higher false discovery rate.
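To see why more columns can mean more false discoveries, here is a minimal sketch. It assumes every attribute is tested independently at the same significance level `alpha`, and it computes the chance of at least one false positive on pure noise. Strictly speaking, that is a simpler relative of the false discovery rate, and the function name is made up for illustration.

```python
def chance_of_false_discovery(num_attributes: int, alpha: float = 0.05) -> float:
    """Probability that at least one of `num_attributes` independent tests
    on pure noise comes out 'significant' at level `alpha` (an assumption
    made for this illustration, not part of the quoted definition)."""
    return 1.0 - (1.0 - alpha) ** num_attributes


# The more columns we test, the more likely a spurious "finding" becomes.
for m in (1, 10, 100):
    print(m, round(chance_of_false_discovery(m), 3))
```

With one attribute the chance equals `alpha`; with a hundred attributes a spurious result is close to certain.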

This term actually means different things to different people. But what the definition makes clear is that ordinary data become big when conventional methods and software are too weak to process them.

In this article, we won't dive deep into big data; we'll just hit the high spots. You can learn more from Vladimir Krasilshchik's Anti-Introduction to Big Data. Though the video is more than 5 years old, it still helps you understand what big data is. Briefly, here are some facts about big data:

- Lots of data as a result of redundancy, immutability, and replication.

- Flexible storage and variety of requests allowed (Cassandra, HDFS, Amazon S3, ...).

- Reliable data recording (Apache Storm, Spark Streaming, Apache Kafka, ...).

- Powerful analytical tools (Hadoop, Spark, ...) that can provide answers based on all data processed.

- «Gives a headache» (c).

These are certainly not sufficient conditions for data to be big, but they, especially the last point, paint a good picture.

To realize how important big data is to IT, you might want to check some historical data and see how the amount of data has grown globally. In 2013, the total amount of data stored in the world was 1.2 zettabytes (zetta- means 10^21). IDC forecast that the total amount would double every two years and reach around 40 zettabytes by 2020. There is even a special term for this rapid increase in data volume: the information explosion. Today, IDC estimates this volume at 59 zettabytes and predicts it will grow to 163 zettabytes by 2025. Most of the data generated today comes not from people but from various kinds of devices (IoT and others) as they interact with each other and with data networks. Go to this text if you want to learn more about the current situation.

Data has become the new oil in the last few years. And while it carries little value in raw form, it turns into a gold mine for any company once processed. Most businesses have long since figured this out and make a very good living off of us. Some of them even pretend they care about our security. But don't be naive, and remember: it's just their business.

But let's not be too blue about it. Discussing the definition of big data and reviewing market conditions may take too much time, so let's move on to more important aspects.

In 2001, Meta Group identified a set of V-attributes of big data, namely:

- Volume.

- Velocity.

- Variety.

This trend was supported by other businesses that added a few more Vs:

- Veracity.

- Viability.

- Value.

- Variability.

- Visualization.

In all cases, these attributes emphasize that the cornerstone characteristic of big data is not only its physical volume, but also other categories that are vital to understanding how intricate data processing and analysis are.

And here's where we ask ourselves, "What is big data about?" The simplest answer is that big data is about distributed systems. One could say that if it weren't for distributed systems, big data would be just a chunk of ordinary data. Speaking of distributed systems, there is a very good definition of the two serious challenges they present:

2. Deliver a message only once.

1. Ensure the order of message delivery.

2. Deliver a message only once.
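The joke lands because both problems are real: a network may deliver messages out of order and more than once. Here is a minimal sketch of how a receiver might cope, assuming the sender attaches a sequence number starting at 0 to every message. The class name `OrderedOnceReceiver` is hypothetical, and a real system would also have to persist this state across restarts.

```python
from typing import Callable, Dict


class OrderedOnceReceiver:
    """Hands messages to `handler` in sequence order, each exactly once,
    even if the transport reorders or redelivers them."""

    def __init__(self, handler: Callable[[str], None]) -> None:
        self._handler = handler
        self._next_seq = 0                  # next sequence number to deliver
        self._pending: Dict[int, str] = {}  # early arrivals waiting for their turn

    def receive(self, seq: int, payload: str) -> None:
        # Already delivered or already buffered: a duplicate, so drop it.
        if seq < self._next_seq or seq in self._pending:
            return
        self._pending[seq] = payload
        # Flush every message that is now contiguous with what was delivered.
        while self._next_seq in self._pending:
            self._handler(self._pending.pop(self._next_seq))
            self._next_seq += 1


delivered = []
receiver = OrderedOnceReceiver(delivered.append)
# Simulated transport: reordered and duplicated messages.
for seq, payload in [(1, "b"), (0, "a"), (1, "b"), (3, "d"), (2, "c"), (0, "a")]:
    receiver.receive(seq, payload)
print(delivered)  # → ['a', 'b', 'c', 'd']
```

The buffer restores order and the sequence check removes duplicates, at the price of keeping state on the receiving side.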

But seriously, distributed systems are the core of big data processing. However, these systems are absolutely useless without the data themselves. This brings us smoothly to the sources of data, which are plentiful nowadays. Today, almost every device connected to the network acts as a source of data.

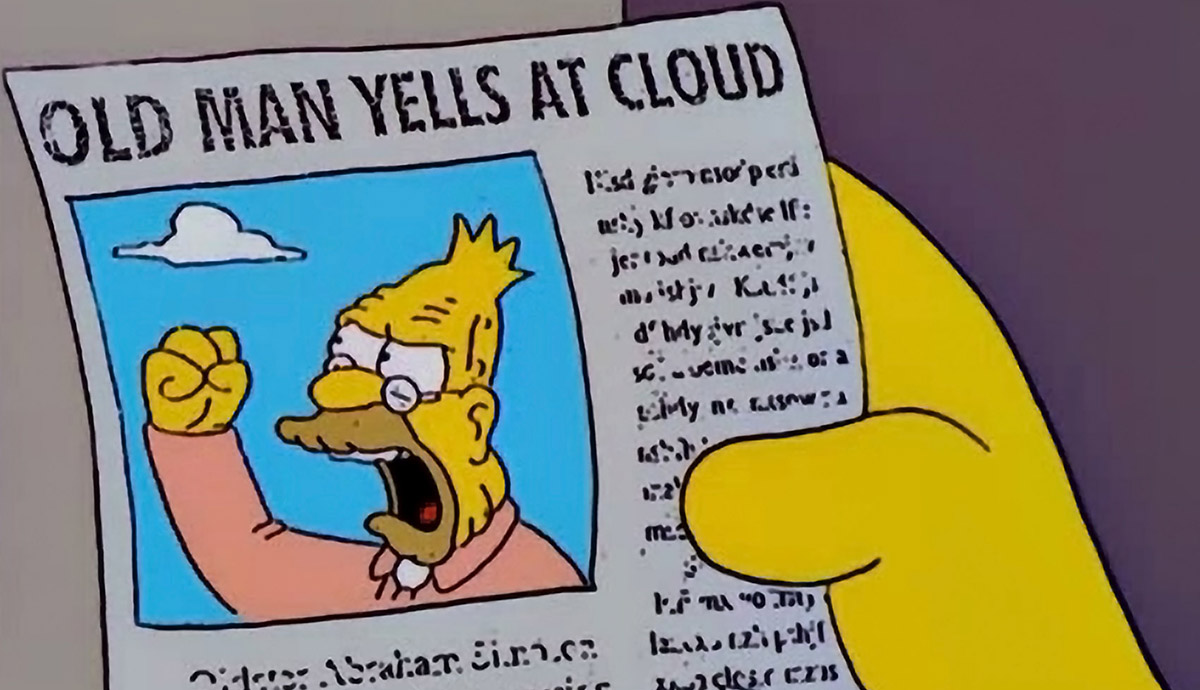

And if everything generates data today, there must be companies capitalizing on it. Yes, there are lots of such businesses. Even in 2015 there were many, and now there are even more. Though the information about startups is a bit outdated, it helps convey the scale of big data applications, which grows year after year. One meme fits the context perfectly, since many people are frustrated with so much personal data being collected.

«Me yelling at Web 3.0 and other scam into which Internet is turning.»

At this point we can be done with data sources and get to the methods and technology of analyzing big data. As a rule, the main methods of analysis are as follows:

- Machine learning and neural networks;

- Data fusion;

- Simulation modeling;

- Genetic algorithms;

- Image recognition;

- Predictive analytics;

- Data mining;

- Network analysis.

Some sources also mention crowdsourcing, visualization, and lots of other methods. One could say that the ends justify the means when it comes to processing big data. And you might even tailor the existing methods and approaches to big data.

If we talk about technology, there is plenty of it. Some people even create fun websites like this one. For the sake of decency, let's mention a few of these technologies.

NoSQL solutions have proved very popular for storing and processing big data, since they are well suited for handling unstructured data. We would single out MongoDB and Apache HBase.

Speaking of processing techniques, everyone has long heard about MapReduce, a rather elegant solution for distributed data processing on a large number of machines. In practice, MapReduce is best known through Apache Hadoop, since something has to manage a horde of computers and something has to feed them data for further processing. In this context, we can't skip Apache Spark, a general-purpose framework for distributed processing that also copes well with unstructured data.
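The idea behind MapReduce can be sketched on a single machine with the classic word count. This is only an illustration of the map, shuffle, and reduce steps in plain Python, not Hadoop's actual API; the function names are made up for the example, and a real cluster would run the map and reduce calls on different machines.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, List, Tuple


def map_phase(document: str) -> Iterator[Tuple[str, int]]:
    """Map: emit a (word, 1) pair for every word in one document."""
    for word in document.lower().split():
        yield word, 1


def shuffle(pairs: Iterable[Tuple[str, int]]) -> Dict[str, List[int]]:
    """Shuffle: group all emitted values by their key."""
    groups: Dict[str, List[int]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key: str, values: List[int]) -> Tuple[str, int]:
    """Reduce: collapse the values of one key into a single result."""
    return key, sum(values)


def word_count(documents: Iterable[str]) -> Dict[str, int]:
    mapped = (pair for doc in documents for pair in map_phase(doc))
    return dict(reduce_phase(k, v) for k, v in shuffle(mapped).items())


counts = word_count(["big data is big", "data is the new oil"])
print(counts)
```

Because each map call sees only one document and each reduce call sees only one key, both steps can be spread across many machines without coordination, which is what makes the model attractive for large data sets.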

It's also worth mentioning a few languages that have proved effective for handling big data: R, Python, and Java / Scala. Java and Scala come together because both run on the JVM, though they differ in syntax. Alongside these languages, data engineers also use the old-timer C++, Go (which has proved its worth), and MATLAB (which is popular among scientists). A good example of a promising newcomer is Julia, which still lacks a solid package base.

In fact, one could use almost any programming language to process big data. The challenge is the lack of packages designed specifically for big data. For instance, Rust could be a great tool here, but it does not yet have many libraries tailored to big data processing.

The bottom line is that technology is developing at full speed, and we are yet to see even more tools and approaches in the field of big data processing. The one thing that remains the same old truth is that data is the new gold.

We recommend that you read this text, and more specifically Data Landscape 2021.